A preview of the secondary data preregistration template is available on OSF.

Outside of science, “double-dipping” refers to dipping a chip after having already taken a bite. Inside of science, “double-dipping” refers to testing a statistical hypothesis using the same data it was derived from. While it is not really clear that the former is harmful, it is quite clear that the latter is. Scientific double-dipping is likely to have adverse consequences because a researcher tests a hypothesis using data they are already familiar with. Therefore, it is likely that they are not blind to the outcome of the hypothesis test anymore, which defeats the purpose of doing the test at all. In Popperian terms: the hypothesis cannot be falsified; in Mayonian terms: the hypothesis cannot be severely tested.

The problematic nature of “double-dipping” has been widely acknowledged, among others by Ben Goldacre who claims that this practice “makes the stats go all wonky” and Eric-Jan Wagenmakers who insists that this practice amounts to “torturing the data until they confess” (a famous statistics quip first coined by Ronald Coase). More formally: statistical tests based on double dipping are likely to lead to false positive findings, and these false positive findings arise because accidental findings are presented as predicted from the start (i.e., the researchers Hypothesize After the Results are Known; they HARK). Some prominent examples of such false positives are the finding that dead salmon can come back to life, the finding that people can predict the future, and the finding that you get younger when listening to the Beatles. While these findings are obviously false, other published false positive results are hard to identify, raising doubts about the validity of research findings across the sciences. One solution to counter this problem is preregistration, where the hypothesis, research design, variables, statistical test, and inference criteria are registered before data collection to prevent double dipping and HARKing.
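The claim that double dipping inflates false positives can be illustrated with a small simulation. This is a minimal sketch under assumptions that are not in the original post: each hypothetical study measures 20 unrelated outcomes that are pure noise, the researcher picks the most striking outcome after looking at the data, and the function names, sample sizes, and seed are chosen purely for illustration. The chosen outcome is then tested once on the data that suggested it and once on fresh data.

```python
import math
import random
import statistics


def t_statistic(sample):
    """One-sample t statistic for the null hypothesis that the mean is 0."""
    n = len(sample)
    return statistics.mean(sample) / (statistics.stdev(sample) / math.sqrt(n))


def simulate(n_studies=1000, n_outcomes=20, n_obs=30, critical_t=2.045, seed=1):
    """Return (double_dip_rate, fresh_data_rate) of false positives.

    critical_t is the two-sided 5% cutoff of the t distribution with
    n_obs - 1 = 29 degrees of freedom; change it if you change n_obs.
    """
    rng = random.Random(seed)
    double_dip_hits = 0
    fresh_hits = 0
    for _ in range(n_studies):
        # Every outcome is pure noise, so any "significant" result is a false positive.
        explore = [[rng.gauss(0, 1) for _ in range(n_obs)] for _ in range(n_outcomes)]
        fresh = [[rng.gauss(0, 1) for _ in range(n_obs)] for _ in range(n_outcomes)]
        # Derive the hypothesis from the data: pick the most striking outcome.
        t_explore = [t_statistic(x) for x in explore]
        best = max(range(n_outcomes), key=lambda j: abs(t_explore[j]))
        # Double dipping: test the hypothesis on the same data that suggested it.
        if abs(t_explore[best]) > critical_t:
            double_dip_hits += 1
        # Independent test: same hypothesis, data that played no role in choosing it.
        if abs(t_statistic(fresh[best])) > critical_t:
            fresh_hits += 1
    return double_dip_hits / n_studies, fresh_hits / n_studies


if __name__ == "__main__":
    double_dip_rate, fresh_rate = simulate()
    print(f"false positive rate, same data:  {double_dip_rate:.2f}")
    print(f"false positive rate, fresh data: {fresh_rate:.2f}")
```

With 20 independent null outcomes, the chance that at least one crosses the 5% threshold is 1 - 0.95^20, roughly 0.64, so the same-data rate should land near that value, while the fresh-data rate should stay close to the nominal 0.05. Real datasets have correlated variables and subtler forms of prior knowledge, so the sketch shows the direction of the problem, not its size in any particular study.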

But is it always a problem if a researcher uses data they have prior knowledge about? You could argue that testing a hypothesis based on data you are familiar with is perfectly reasonable when your familiarity is not directly related to the hypothesis. What if you revisit a dataset you worked with for your master’s thesis and want to test a hypothesis that is tangentially related to the one in your master’s thesis? And what if you want to determine the boundary conditions of an effect and want to test an auxiliary hypothesis using the same data you used to test the main hypothesis? It quickly becomes clear that there is a large grey area where researchers have some knowledge but not perfect knowledge about the data. We have no intention to decide on the types of prior knowledge for which hypothesis tests are and aren’t allowed, but what we do want to do is suggest a practice that makes it possible for others to assess researchers’ prior knowledge: the preregistration of secondary data analyses.

While the negative press about double dipping and torturing data may have led some researchers to believe that secondary data analyses cannot be preregistered, this idea is not correct. Quite the opposite in fact: we would argue that preregistration is even more useful for secondary data analyses than for primary data analyses because knowing about your prior knowledge is crucial for others to determine the validity of your analyses. Furthermore, preregistering secondary data analyses has the same advantages as typical preregistration. For example, preregistration forces you to think clearly about whether your research design and statistical analyses match your theoretical framework, and is therefore likely to lead to more methodologically rigorous studies.

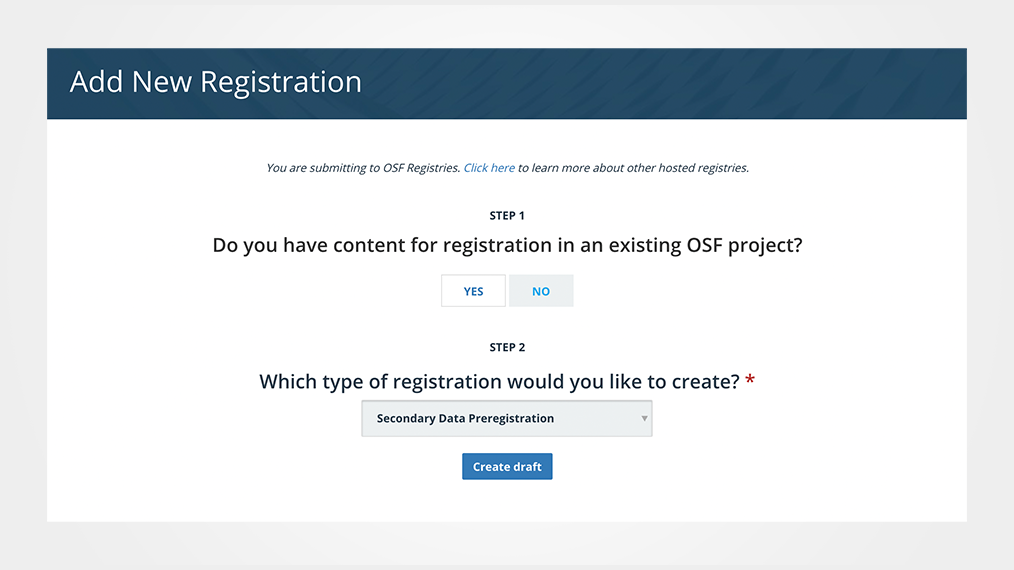

However, preregistering secondary data analyses does come with some unique challenges that were not addressed in the available preregistration templates. Therefore, a group of experts in secondary data analysis and preregistration came together during a session at the conference of the Society for the Improvement of Psychological Science (SIPS) in 2018 to create a template that researchers could use to preregister their secondary data analyses. A rough draft was finalized right after the conference, and was subsequently refined during several rounds of feedback. This new preregistration template is based on the extensive OSF prereg template (formerly the prereg challenge template), and has several additional questions to establish the prior knowledge a researcher has about the data. This blog post accompanies the launch of our template as an ‘official’ OSF template. If you want extensive guidance on how to use the template you can check out the special tutorial paper we wrote for the template.

As the practices of (secondary) data analysis and preregistration are likely to change in the future, the template will be a work in progress for the foreseeable future. Therefore, please do relay your experiences with the template and any difficulties that you may have encountered while using it. This allows us to continuously improve the template and make the process of preregistration more accessible. Admittedly, preregistering a secondary data analysis (or any analysis for that matter) can be hard, but hopefully our template and the accompanying tutorial can convince you that it is worthwhile and feasible. We strongly believe that preregistering secondary data analyses will make your work and science as a whole more transparent, reproducible, and replicable.

A recorded demonstration of the template, with best practices for preregistering secondary data, is also available.


